by Louis Freeland-Haynes

26 March 2024

Writing code plays a big part in graduate work across many fields, particularly in the sciences, where it is often a substantial component of students’ work and education as well as an important research output. In recent years, large language models have become increasingly capable of writing and interpreting code, and since June 2022 students worldwide have had free access to GitHub Copilot, an AI pair programming tool with a startling ability to write code. Copilot is a descendant of the well-known large language model GPT-3, trained on billions of lines of source code from GitHub.

Coding with the assistance of an AI pair programmer like Copilot can feel radically different from coding on your own. When used in an integrated development environment (IDE) like Visual Studio, Copilot looks over your existing code and provides automatic suggestions for current and future lines of code whilst you write, just as autosuggestion tools do for emails or SMS messages.

From the perspective of the user, getting the most useful suggestions requires learning how to carefully steer the model’s responses. This most often takes the form of annotating your code with clear, specific comments that explain what your code is doing, and exactly what you intend to do next. This idea of prompt engineering, carefully finding the right wording to input to a model in order to get a useful output, is common to all models that take language as input.
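As a rough illustration of what such a comment might look like in Python, compare a vague prompt with a specific one. Everything here is hypothetical: the function name `column_mean`, the sample data, and the function body were written by hand for this sketch and are not captured Copilot output. The point is only that the specific comment states the input, the output, and the edge cases.

```python
import csv
import io

# Vague prompt: gives a model very little to work with.
# process the data

# Specific prompt: names the input, the output, and the edge cases.
# Read CSV text with a header row, take the column called `column`,
# skip blank cells, and return the mean of the remaining values as a float.
# Raise ValueError if no values remain.
def column_mean(csv_text: str, column: str) -> float:
    reader = csv.DictReader(io.StringIO(csv_text))
    values = [float(row[column]) for row in reader if row[column].strip()]
    if not values:
        raise ValueError(f"no values found in column {column!r}")
    return sum(values) / len(values)


# Made-up sample data with one blank cell in the `height` column.
sample = "height,weight\n1.5,60\n,70\n2.5,80\n"
print(column_mean(sample, "height"))
```

A comment written at this level of detail also doubles as documentation for whoever reads the code next, whether or not a model ever sees it.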

An under-appreciated implication of this prompt engineering process is that it can lead to better coding practices. To get the most out of Copilot, you often end up spending time thinking ahead about how to structure your code and making comments that will improve its clarity and interpretability.

The assistance of an AI pair programmer is not, however, a panacea for a newcomer to programming. Just as other large language models “hallucinate” facts, models like Copilot produce faulty code, using variables or functions that don’t exist. The most dangerous errors, however, are those silent or logical errors that don’t confront you with error messages but will do something subtly different to what you intended. The need for an informed user behind the keyboard is very much still present.
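To make the idea of a silent error concrete, here is a small hypothetical example in Python. Neither function is real Copilot output; `median_buggy` is simply the kind of plausible-looking code that runs without any error message while computing the wrong thing, and `median_checked` is a hand-written correction.

```python
import statistics

def median_buggy(values):
    # Looks reasonable, but never sorts the data first,
    # so the "median" depends on the order of the input.
    return values[len(values) // 2]

def median_checked(values):
    # Sort first; for an even number of values, average the two middle ones.
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2 == 1:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2

data = [9, 1, 7, 3, 5]  # made-up, deliberately unsorted
print(median_buggy(data))    # runs without complaint, but is not the median
print(median_checked(data))

# Comparing against a trusted reference is one way to catch the difference.
assert median_checked(data) == statistics.median(data)
```

Nothing in the buggy version raises an exception, which is why a quick check against a known answer or a trusted library function is worth the extra line.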

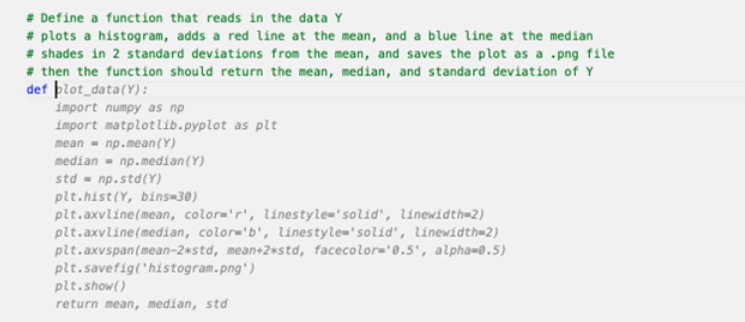

The difference is that, just as the advent of search tools like Google changed the kinds of information we prioritize remembering, large language model tools are changing what we need to remember about coding. Struggling to remember exactly what the API is for that plotting software package, or how to add a dashed vertical line on your plot at the mean? It probably doesn’t matter. Just prompt Copilot appropriately and it will most likely get it right in less than a second, as in the author’s screenshot accompanying this article.
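For reference, the dashed vertical line at the mean takes only a few lines. This sketch assumes Matplotlib as the plotting package (the article does not name one), uses made-up data, and picks an arbitrary output filename; it is not a reproduction of the author's screenshot.

```python
import statistics

import matplotlib
matplotlib.use("Agg")  # non-interactive backend, so the script runs without a display
import matplotlib.pyplot as plt

values = [2.1, 2.9, 3.4, 3.8, 4.0, 4.4, 5.1, 5.6, 6.3]  # made-up data
mean_value = statistics.mean(values)

fig, ax = plt.subplots()
ax.hist(values, bins=5)

# Dashed vertical line at the mean of the data.
mean_line = ax.axvline(
    mean_value, linestyle="--", color="black", label=f"mean = {mean_value:.2f}"
)
ax.legend()
fig.savefig("histogram_with_mean.png")  # hypothetical filename
```

Even when a suggestion like this appears instantly, it is still worth opening the saved figure to confirm that the line sits where you expect.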

Large language models can thus take away a lot of the mental effort involved in writing rote, boilerplate code or doing data manipulation. Making good use of them means that you have more time to spend on the more intellectually rewarding aspects of graduate work.

A screenshot by the author, who was feeling lazy about plotting data: everything in gray was auto-suggested by GitHub Copilot.